Preface

This post covers how to count the parameters of an LSTM layer and how to use the Keras TimeDistributed layer. The input to an LSTM has the shape [Samples, Time Steps, Features]:

- Samples. One sequence is one sample. A batch is comprised of one or more samples. (In other words, how many data records there are.)

- Time Steps. One time step is one point of observation in the sample. (Usually this corresponds to the length of the time series.)

- Features. One feature is one observation at a time step. (The length of the vector at each time step.)

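Take text as an example. Suppose a batch has batch_size = 50 and each line of text has length max_len = 10, so the input matrix is [50, 10]. After each token is embedded into a 300-dimensional vector, the shape becomes [50, 10, 300], corresponding to Samples, Time Steps, and Features respectively.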
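To make these shapes concrete, the NumPy sketch below imitates the embedding step with a random lookup table. The vocabulary size `vocab_size = 1000`, the random token IDs, and the random table are assumptions for illustration only; a real model would use a trained Embedding layer.

```python
import numpy as np

batch_size, max_len, emb_dim = 50, 10, 300
vocab_size = 1000  # hypothetical vocabulary size, chosen only for illustration

rng = np.random.default_rng(0)
# A batch of token IDs: [Samples, Time Steps]
tokens = rng.integers(0, vocab_size, size=(batch_size, max_len))
# A random table standing in for a trained embedding matrix
embedding_table = rng.normal(size=(vocab_size, emb_dim))
# Looking up every token yields [Samples, Time Steps, Features]
lstm_input = embedding_table[tokens]

print(tokens.shape)      # (50, 10)
print(lstm_input.shape)  # (50, 10, 300)
```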

The other parameter to set is the LSTM's output dimension (Output_dim), for example 128. The number of LSTM parameters is then computed as follows.

Computing the parameters of the LSTM layer

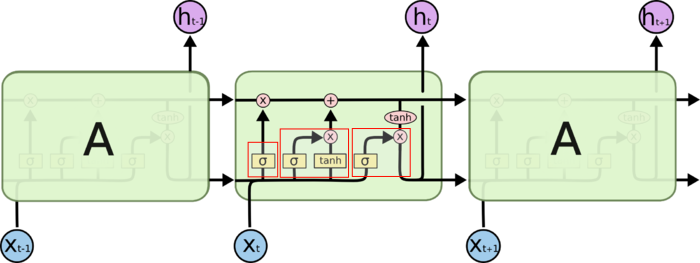

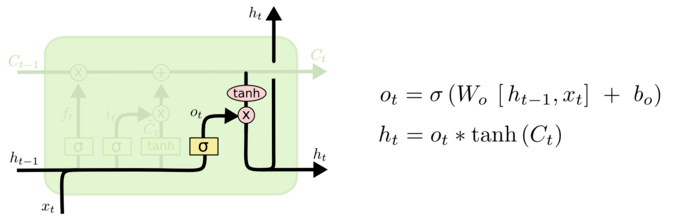

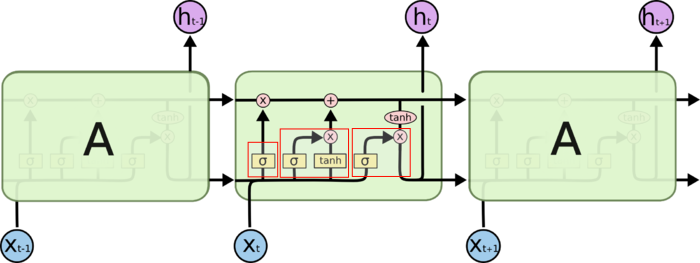

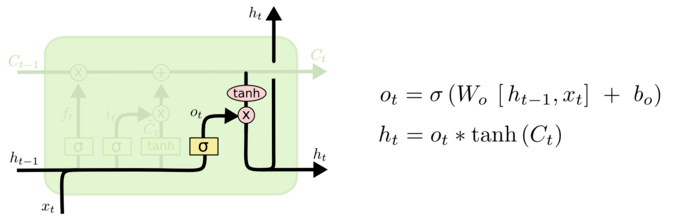
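As a quick check of the counting rule, here is a minimal Python sketch. It assumes the standard LSTM with four gate blocks and one bias vector per block; variants with extra bias vectors or peephole connections would count differently. The function name `lstm_param_count` is our own, not a Keras API.

```python
def lstm_param_count(features: int, output_dim: int) -> int:
    """Parameter count of a standard LSTM layer (one bias vector per gate block)."""
    # Each of the 4 gate blocks has a weight matrix of shape
    # (output_dim + features, output_dim) plus a bias of length output_dim.
    per_gate = (output_dim + features) * output_dim + output_dim
    return 4 * per_gate

# The example from the text: 300-dim embeddings, Output_dim = 128
print(lstm_param_count(300, 128))  # 219648
```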

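Where does this way of counting LSTM parameters come from? First, understand weight sharing in RNNs. In an LSTM, the cell's weights are shared across time steps. What does that mean? The three large green boxes in the figure look like three cells, but they actually represent one and the same cell at different time steps: every time step passes through that single cell, so there is only one set of weights, and that one set is what gets updated during training.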

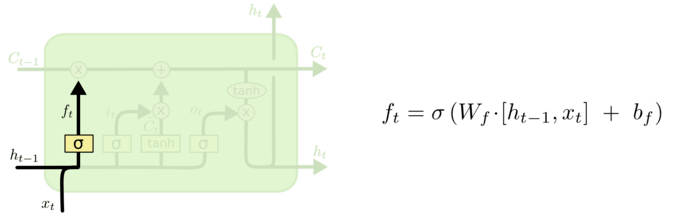

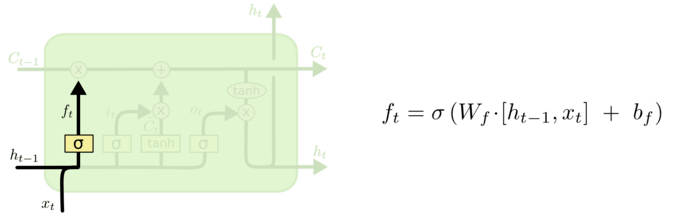
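The weight sharing described above can be sketched in NumPy: a single weight matrix `W` and bias `b` are reused at every time step of the loop, so the parameter count does not depend on the sequence length. The small sizes, the random initialisation, and the order in which the four gate blocks are stacked are arbitrary assumptions for illustration, not how Keras lays out its weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

features, output_dim, time_steps = 3, 4, 5  # small, arbitrary sizes for illustration
rng = np.random.default_rng(0)

# ONE set of weights for the whole sequence: four gate blocks stacked column-wise
W = rng.normal(scale=0.1, size=(output_dim + features, 4 * output_dim))
b = np.zeros(4 * output_dim)

x_seq = rng.normal(size=(time_steps, features))  # one sample: [Time Steps, Features]
h = np.zeros(output_dim)  # hidden state h_{t-1}
c = np.zeros(output_dim)  # cell state C_{t-1}

for x_t in x_seq:
    z = np.concatenate([h, x_t]) @ W + b          # [h_{t-1}, x_t] times the shared weights
    f, i, c_hat, o = np.split(z, 4)               # gate order here is arbitrary
    f, i, o = sigmoid(f), sigmoid(i), sigmoid(o)  # forget, input, output gates
    c = f * c + i * np.tanh(c_hat)                # cell-state update (no extra weights)
    h = o * np.tanh(c)                            # new hidden state

print(h.shape)          # (4,)
print(W.size + b.size)  # 128, however many time steps there are
```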

Let us first consider the forget gate:

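In the formula in the figure, $h_{t-1}$ has length Output_dim, so $[h_{t-1}, x_{t}]$ has length Output_dim + Features. $W_{f}$ and $b_{f}$ are the parameters of this gate: $W_{f}$ has size (Output_dim + Features) × Output_dim and $b_{f}$ has size Output_dim, so the total number of parameters for this gate is: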

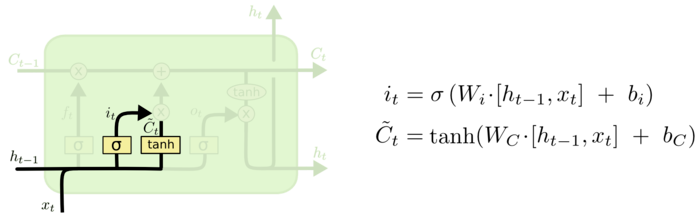

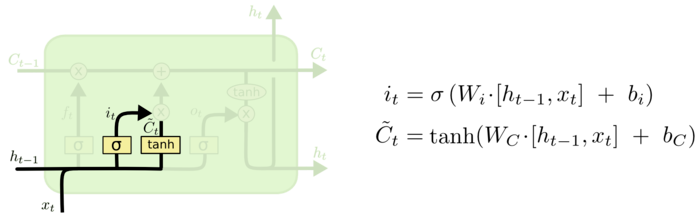
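Written out for the running example (Features = 300, Output_dim = 128), the forget-gate count is as follows; here $|\cdot|$ denotes the number of entries, which is our notation rather than the figure's:

$$
\begin{aligned}
f_t &= \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \\
|W_f| + |b_f| &= (\mathrm{Output\_dim} + \mathrm{Features}) \times \mathrm{Output\_dim} + \mathrm{Output\_dim} \\
&= (128 + 300) \times 128 + 128 = 54912
\end{aligned}
$$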

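Likewise, the update (input) stage has twice as many parameters as the forget gate, because it consists of the input gate $i_{t}$ and the candidate values $\tilde{C}_{t}$, each with its own weight matrix and bias.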

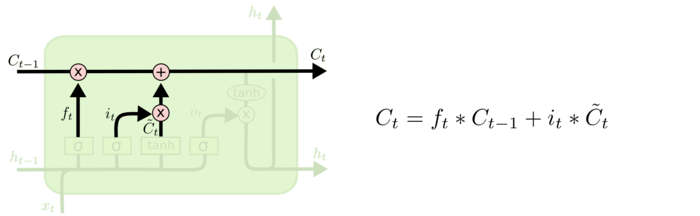

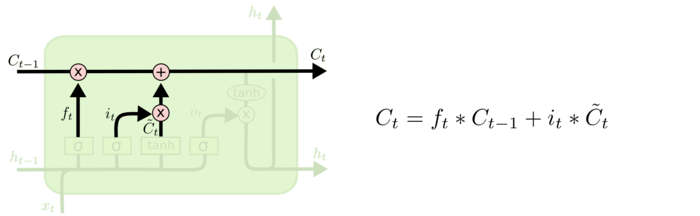

The cell-state update itself has no parameters to learn; it only combines the gate outputs element-wise.

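The output gate has as many parameters as the forget gate, so the whole LSTM layer has 4 times the forget gate's parameter count.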
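The gate-by-gate tally can be sketched as follows, using Features = 1 and Output_dim = 5 (the sizes of the one-to-one example below). The helper `gate_params` and the block labels are hypothetical names for illustration.

```python
features, output_dim = 1, 5  # sizes used in the one-to-one example

def gate_params(features, output_dim):
    # One gate: weights of shape (output_dim + features, output_dim) plus a bias
    return (output_dim + features) * output_dim + output_dim

# How many forget-gate-sized blocks each part of the cell contributes
blocks = {
    "forget gate": 1,
    "update (input) stage": 2,  # input gate + candidate values
    "cell-state update": 0,     # element-wise ops only, nothing to learn
    "output gate": 1,
}
total = sum(n * gate_params(features, output_dim) for n in blocks.values())
for name, n in blocks.items():
    print(f"{name}: {n * gate_params(features, output_dim)}")
print("total:", total)  # total: 140
```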

One-to-one sequence prediction

First, consider a simple sequence problem. Suppose the data X and Y are as follows, and we use an LSTM for sequence prediction:

```
X: [0.  0.2 0.4 0.6 0.8]
Y: [0.  0.2 0.4 0.6 0.8]
```

The code is simple, and the result is correct:

```python
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM
from numpy import array

# prepare sequence: 5 samples, each with 1 time step and 1 feature
length = 5
seq = array([i/float(length) for i in range(length)])
X = seq.reshape(len(seq), 1, 1)
y = seq.reshape(len(seq), 1)

# define LSTM configuration
n_neurons = length
n_batch = length
n_epoch = 1000

# create LSTM
model = Sequential()
model.add(LSTM(n_neurons, input_shape=(1, 1)))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.summary()

# train LSTM
model.fit(X, y, epochs=n_epoch, batch_size=n_batch, verbose=2)

# evaluate: each prediction is an array of shape (1,), so take its single value
result = model.predict(X, batch_size=n_batch, verbose=0)
for value in result:
    print('%.1f' % value[0])
```
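The key step in this example is the reshape: each of the 5 values becomes its own sample with a single time step, so the LSTM never sees more than one value at a time. A NumPy-only check of the shapes (no Keras needed):

```python
from numpy import array

length = 5
seq = array([i / float(length) for i in range(length)])

X = seq.reshape(len(seq), 1, 1)  # [Samples=5, Time Steps=1, Features=1]
y = seq.reshape(len(seq), 1)     # one target value per sample

print(X.shape, y.shape)  # (5, 1, 1) (5, 1)
```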

Now let's compute the parameters of the LSTM layer by hand:

```
n = 4 * ((inputs + 1) * outputs + outputs^2)
n = 4 * ((1 + 1) * 5 + 5^2)
n = 4 * 35
n = 140
```
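The same formula in Python, as a small sketch: note that `^` in the calculation above means "to the power of", which is `**` in Python. Python's `^` is bitwise XOR, so writing `5^2` literally would give 7 and a wrong count. The function name is our own.

```python
def lstm_param_count(inputs: int, outputs: int) -> int:
    # "^2" in the hand calculation is exponentiation, i.e. "** 2" in Python
    return 4 * ((inputs + 1) * outputs + outputs ** 2)

print(lstm_param_count(1, 5))  # 140
# Same value as the gate-by-gate form 4 * ((outputs + inputs) * outputs + outputs)
assert lstm_param_count(1, 5) == 4 * ((5 + 1) * 5 + 5)
```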

The parameters of the fully connected (Dense) layer are computed as follows:

```
n = inputs * outputs + outputs
n = 5 * 1 + 1
n = 6
```
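Where those 6 parameters live can be seen in a NumPy sketch of a Dense(1) forward pass on the 5-dimensional LSTM output: a 5×1 kernel plus a single bias. The batch of 3 random vectors and the random weights are assumptions for illustration.

```python
import numpy as np

inputs, outputs = 5, 1  # LSTM output size -> Dense(1)
rng = np.random.default_rng(0)
W = rng.normal(size=(inputs, outputs))  # kernel: inputs * outputs weights
b = np.zeros(outputs)                   # bias: one per output unit

h = rng.normal(size=(3, inputs))  # a batch of 3 LSTM outputs (batch size arbitrary)
y = h @ W + b                     # Dense forward pass with no activation

print(y.shape)          # (3, 1)
print(W.size + b.size)  # 6
```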

These match the parameter counts printed by Keras:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm_1 (LSTM)                (None, 5)                 140
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 6
=================================================================
Total params: 146.0
Trainable params: 146
Non-trainable params: 0.0
_________________________________________________________________
```

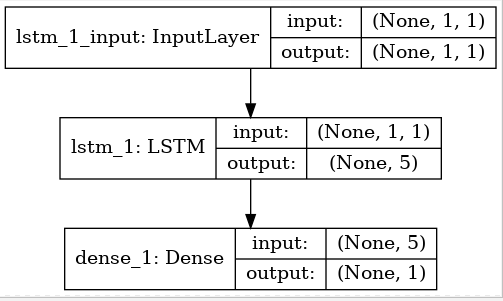

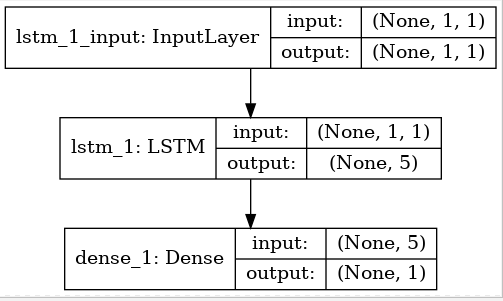

模型的结构图如下:

Many-to-one sequence prediction

Prepare the data again; this time the five values form a single input sequence:

```
X1: 0
X2: 0.2
X3: 0.4
X4: 0.6
X5: 0.8
Y : [0.  0.2 0.4 0.6 0.8]
```
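The difference from the one-to-one setup is again in the reshape: there is now one sample with 5 time steps, and its target is the whole 5-value vector. A NumPy-only shape check:

```python
from numpy import array

length = 5
seq = array([i / float(length) for i in range(length)])

X = seq.reshape(1, length, 1)  # [Samples=1, Time Steps=5, Features=1]
y = seq.reshape(1, length)     # one sample whose target is all 5 values

print(X.shape, y.shape)  # (1, 5, 1) (1, 5)
```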

The code is as follows:

```python
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import LSTM

# prepare sequence: 1 sample with 5 time steps and 1 feature
length = 5
seq = array([i/float(length) for i in range(length)])
X = seq.reshape(1, length, 1)
y = seq.reshape(1, length)

# define LSTM configuration
n_neurons = length
n_batch = 1
n_epoch = 500

# create LSTM: the Dense layer outputs all 5 values at once
model = Sequential()
model.add(LSTM(n_neurons, input_shape=(length, 1)))
model.add(Dense(length))
model.compile(loss='mean_squared_error', optimizer='adam')
model.summary()

# train LSTM
model.fit(X, y, epochs=n_epoch, batch_size=n_batch, verbose=2)

# evaluate
result = model.predict(X, batch_size=n_batch, verbose=0)
for value in result[0, :]:
    print('%.1f' % value)
```

The network structure printed by Keras is:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm_1 (LSTM)                (None, 5)                 140
_________________________________________________________________
dense_1 (Dense)              (None, 5)                 30
=================================================================
Total params: 170.0
Trainable params: 170
Non-trainable params: 0.0
_________________________________________________________________
```

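This time there are 170 parameters. The LSTM layer is unchanged (140); the extra parameters come from the fully connected layer, computed as follows: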

```
n = inputs * outputs + outputs
n = 5 * 5 + 5
n = 30
```
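Since the LSTM layer is identical in both models (Features = 1, Output_dim = 5, so 140 parameters), the totals differ only through the Dense layer. A small sketch with a hypothetical helper `dense_param_count`:

```python
def dense_param_count(inputs: int, outputs: int) -> int:
    # kernel (inputs x outputs) plus one bias per output
    return inputs * outputs + outputs

lstm = 140  # same LSTM layer in both examples
print(lstm + dense_param_count(5, 1))  # one-to-one: 146
print(lstm + dense_param_count(5, 5))  # many-to-one: 170
```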

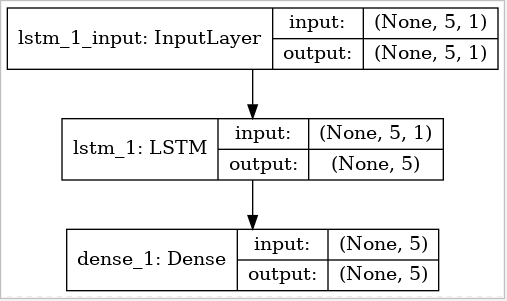

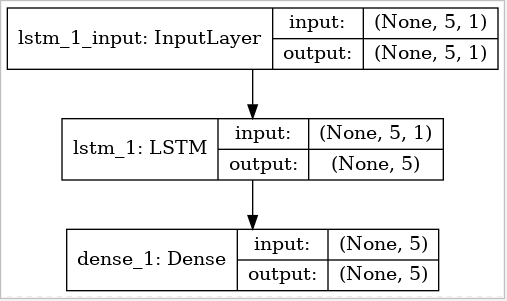

整体的模型结构如下:

Many-to-many LSTM sequence prediction with TimeDistributed

```python
from numpy import array
from keras.models import Sequential
from keras.layers import Dense
from keras.layers import TimeDistributed
from keras.layers import LSTM

# prepare sequence: 1 sample, 5 time steps, 1 feature (for both input and output)
length = 5
seq = array([i/float(length) for i in range(length)])
X = seq.reshape(1, length, 1)
y = seq.reshape(1, length, 1)

# define LSTM configuration
n_neurons = length
n_batch = 1
n_epoch = 1000

# create LSTM: return_sequences=True keeps one output per time step,
# and TimeDistributed applies the same Dense(1) to each of them
model = Sequential()
model.add(LSTM(n_neurons, input_shape=(length, 1), return_sequences=True))
model.add(TimeDistributed(Dense(1)))
model.compile(loss='mean_squared_error', optimizer='adam')
model.summary()

# train LSTM
model.fit(X, y, epochs=n_epoch, batch_size=n_batch, verbose=2)

# evaluate
result = model.predict(X, batch_size=n_batch, verbose=0)
for value in result[0, :, 0]:
    print('%.1f' % value)
```
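What TimeDistributed(Dense(1)) computes can be sketched in NumPy: one kernel and bias, applied independently at each of the 5 time steps of the LSTM output sequence. This is why the layer has only 6 parameters rather than 30. The random stand-in for the LSTM output and the random weights are assumptions; this illustrates the idea, not Keras's internal implementation.

```python
import numpy as np

batch, time_steps, units = 1, 5, 5  # as in LSTM(5, return_sequences=True)
rng = np.random.default_rng(0)
lstm_out = rng.normal(size=(batch, time_steps, units))  # stand-in LSTM output sequence

# A single Dense(1): one kernel and one bias, shared by every time step
W = rng.normal(size=(units, 1))
b = np.zeros(1)

# TimeDistributed: apply the same Dense layer to each time step separately
out_loop = np.stack([lstm_out[:, t, :] @ W + b for t in range(time_steps)], axis=1)

# Equivalent vectorised form: matmul broadcasts over the batch and time axes
out_vec = lstm_out @ W + b

print(out_loop.shape)                  # (1, 5, 1)
print(np.allclose(out_loop, out_vec))  # True
print(W.size + b.size)                 # 6
```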

The network structure printed by Keras is:

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
lstm_1 (LSTM)                (None, 5, 5)              140
_________________________________________________________________
time_distributed_1 (TimeDist (None, 5, 1)              6
=================================================================
Total params: 146.0
Trainable params: 146
Non-trainable params: 0.0
_________________________________________________________________
```

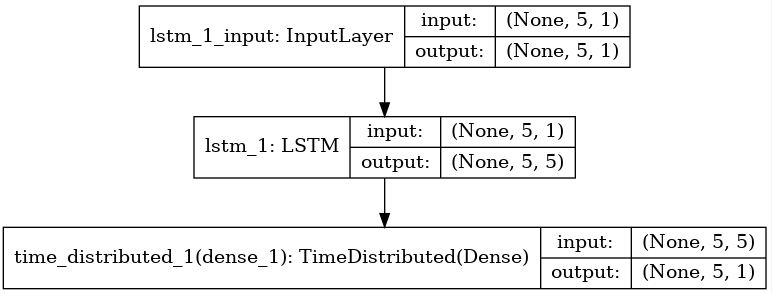

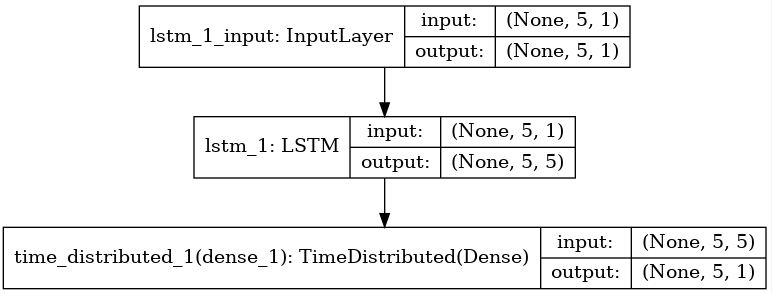

整体的模型结构如下:

Reference

- How to Use the TimeDistributed Layer for Long Short-Term Memory Networks in Python